TypeScript & SFDX

Working with TypeScript is an incredible experience — and one that I can’t recommend highly enough for anybody who feels like they’re starting to get comfortable with JavaScript. In this post, I’ll review how working with TypeScript and SFDX can be mutually beneficial. Whether your goal is to use SFDX within CI/CD, extend the functionality of the CLI in ways that you don’t see being broadly reshareable, or within the context of something like an open source GitHub Action, you can benefit from using TypeScript over vanilla JavaScript so that you can shift left on things like unit testing by making runtime problems into compile-time exceptions.

TypeScript Essentials

I recently had a great time converting a JavaScript repository to TypeScript in order to get better quality of life when developing and testing the project locally. It’s crucial to understand that using TypeScript over JavaScript offers no immediate advantage to something that’s feature-complete, because the code that ends up being executed should be more or less the same (since TypeScript gets converted back into JavaScript when it needs to be run). The benefit comes when any refactoring is necessary, or when any functionality needs to be added. It can also help to point out flaws in the existing code: the sort of flaw that could be surfaced through good unit or integration tests, but where those tests become strictly unnecessary when working with a strong-enough type system (like TypeScript’s).

One of the nicest things about working with TypeScript? It’s a superset of JavaScript. Put another way? All JavaScript is valid TypeScript, which means that you can convert an existing JavaScript project into a TypeScript project purely by changing the file extension from .js to .ts. This, in conjunction with an iterative approach to how you build up your tsconfig.json file, allows you to add in compile-time checks on your code as you go:

{

"compilerOptions": {

"esModuleInterop": true,

"module": "commonjs",

"outDir": "./lib",

"rootDir": "./src",

"target": "es6"

},

"exclude": ["**/*.test.ts"]

}

That’s a very simple tsconfig.json file, and it’s not going to start surfacing any kind of compile-time checks if we were to simply convert a JavaScript file to a TypeScript file (until you start adding type information to your existing code). In my case, I wanted to immediately surface all of the errors, and adding one single line to the shown tsconfig.json file will really speed up that process:

{

"compilerOptions": {

"esModuleInterop": true,

"module": "commonjs",

+ "noImplicitAny": true,

"outDir": "./lib",

"rootDir": "./src",

"target": "es6",

},

"exclude": ["**/*.test.ts"]

}

This will transform any existing JavaScript file into a mess of errors, and the only way to fix them is by starting to add types! One last thing before we really dive in: in Apex, all types are “magically” available to us. That is, once we’ve deployed an interface or class, it can be referenced everywhere (unless you’re doing managed package development, or interacting with managed packages; in this case, there is a difference between classes that are declared as public versus classes that are declared as global, but for the most part this point stands). In most other languages, you have to import your type definitions, and TypeScript is no exception. You can create a “magic” file, global.d.ts, which allows you to create and reference types anywhere within a codebase without directly importing them, but I wouldn’t recommend doing so. As much as is possible, we should try to write code in the idiomatic style of that language, even if it’s different from the style employed by the other languages we use.

Note, as well, that there are additional flags that I recommend using in your tsconfig.json — things like strictNullChecks being set to true will considerably lessen the chance of null pointer exceptions occurring in your code.
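As a rough illustration of what strictNullChecks buys you, here’s a minimal sketch. The names are made up for this example (they aren’t from the project discussed here), and it assumes strictNullChecks is set to true. Map.get returns number | undefined, so the compiler refuses to treat the result as a number until the undefined case has been handled:

```typescript
// Hypothetical example: names are illustrative, not from the project above.
// Assumes "strictNullChecks": true in tsconfig.json.
const lineCountsByFile = new Map<string, number>([["src/index.ts", 293]]);

function getLineCount(filePath: string): number {
  const count = lineCountsByFile.get(filePath);
  // Without the guard below, `return count;` would fail to compile with:
  // Type 'number | undefined' is not assignable to type 'number'.
  if (count === undefined) {
    return 0;
  }
  // Past the guard, `count` has been narrowed to `number`.
  return count;
}

console.log(getLineCount("src/index.ts")); // 293
console.log(getLineCount("missing.ts")); // 0
```

With the flag turned off, the same function compiles without the guard and quietly returns undefined for unknown files.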

Refactoring From JavaScript To TypeScript

Once we’ve flipped that flag within our tsconfig.json, a ton of compilation errors will start to surface — things like this:

src/index.ts:293:33 - error TS7006: Parameter 'argB' implicitly has an 'any' type.

293 function someFunction(argA, argB) {

~~~~~~~~

Found 33 errors in the same file, starting at: src/index.ts:17

For dependencies (things that we import from a package registry like NPM in our package.json file), finding the correct types to use is often trivial since many packages are directly including them. Let’s look at a few examples:

// beginning with our dependency import

import parse from "parse-diff";

async function getFilePathToChangesLines() {

// ... some async stuff

// that gets the "diffString" arg below

const files = parse(diffString);

const typesOfInterest = new Set().add("add").add("delete");

const filePathToChangedLines = new Map();

for (let file of files) {

const changedLines = new Set();

for (let chunk of file.chunks) {

for (let change of chunk.changes) {

if (typesOfInterest.has(change.type)) {

changedLines.add(change.ln);

// ^^^^ - compile-time error, as shown below

}

}

}

filePathToChangedLines.set(file.to, changedLines);

}

return filePathToChangedLines;

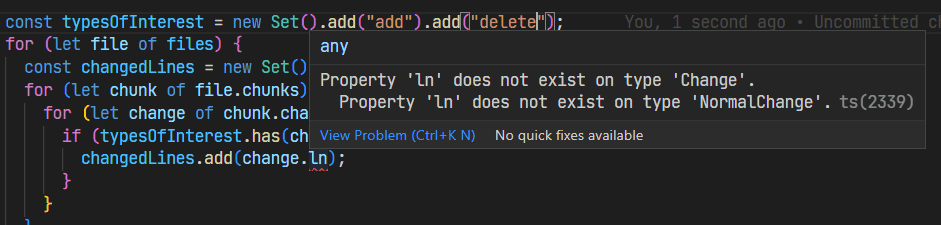

}Here’s the immediate error that shows up:

It’s also possible to surface compile-time errors for the Maps and Sets not using explicit types, but since we’re here and we know there’s a compile-time problem already, let’s get to work. Interestingly, note that this compilation error is already coming from our dependency. In other words, we’ve done nothing to this file except changed its file type from .js to .ts — but because there is a type signature for the parse function, TypeScript is already smart enough to know enough to surface an error to us.

If we use the “Go to Type Definition” command on the let change part of our function, above, we’ll be navigated to parse-diff’s exported types:

export interface Chunk {

content: string;

changes: Change[];

oldStart: number;

oldLines: number;

newStart: number;

newLines: number;

}

export interface NormalChange {

type: "normal";

ln1: number;

ln2: number;

normal: true;

content: string;

}

export interface AddChange {

type: "add";

add: true;

ln: number;

content: string;

}

export interface DeleteChange {

type: "del";

del: true;

ln: number;

content: string;

}

export type ChangeType = "normal" | "add" | "del";

export type Change = NormalChange | AddChange | DeleteChange;

There’s already a crucial piece of info that we’ve learned from looking at these types, so let’s fix the existing error and introduce some new ones:

import parse, { AddChange, ChangeType, DeleteChange } from "parse-diff";

// ... then in our function

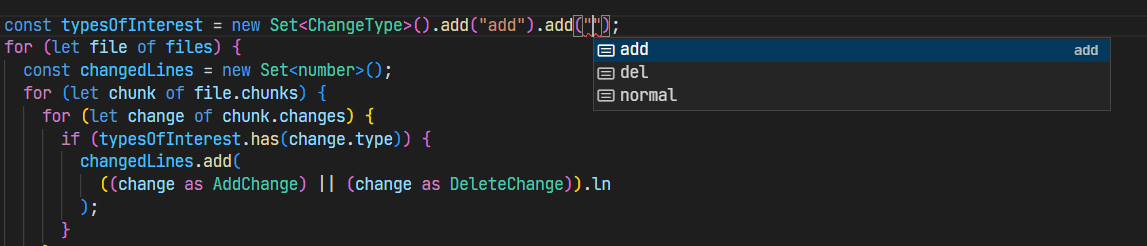

const typesOfInterest = new Set<ChangeType>().add("add").add("delete");

// ^^^^ compile error

const filePathToChangedLines = new Map<string, Set<number>>();

for (let file of files) {

const changedLines = new Set<number>();

for (let chunk of file.chunks) {

for (let change of chunk.changes) {

if (typesOfInterest.has(change.type)) {

changedLines.add(

((change as AddChange) || (change as DeleteChange)).ln

);

}

}

}

filePathToChangedLines.set(file.to, changedLines);

// ^^^^ compile error

}

return filePathToChangedLines;Now we have two messages showing in our Problems terminal pane:

Argument of type '"delete"' is not assignable to parameter of type 'ChangeType'.

Argument of type 'string | undefined' is not assignable to parameter of type 'string'.

Type 'undefined' is not assignable to type 'string'.TypeScript is incredibly good. Once we’ve added the type to typesOfInterest, for example, we even get compile-time safety for random string usage:

It also recognizes guard clauses as protecting against issues like accessing undefined variables. Our final solution for this function (pre-testing, of course), ends up something like:

async function getFilePathToChangesLines() {

const filePathToChangedLines = new Map<string, Set<number>>();

const diffString = await someAsyncFunction();

const files = parse(diffString);

const typesOfInterest = new Set<ChangeType>().add("add").add("del");

for (let file of files) {

if (file.to) {

const changedLines = new Set<number>();

for (let chunk of file.chunks) {

for (let change of chunk.changes) {

if (typesOfInterest.has(change.type)) {

changedLines.add(

((change as AddChange) || (change as DeleteChange)).ln

);

}

}

}

filePathToChangedLines.set(file.to, changedLines);

}

}

return filePathToChangedLines;

}

Now, that’s not to say that introducing type signatures magically fixes all problems. For instance, while testing this function out I realized there was an additional condition needed in the guard clause I’d introduced, to avoid having to iterate through deleted files. That’s not the sort of optimization that strong typing is going to surface on your behalf. Already, though, hopefully it’s become apparent that using TypeScript helps us to avoid invalid program states.
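As an aside, the AddChange and DeleteChange casts in the function above aren’t strictly required. Each member of the Change union carries a literal type property, so comparing against those literals lets TypeScript narrow the union on its own. Here’s a minimal sketch of that idea. The interfaces are local copies of the parse-diff excerpt shown earlier so that the snippet stands alone, and collectChangedLines is a hypothetical helper name:

```typescript
// Local copies of the parse-diff shapes shown earlier, so this runs standalone.
interface NormalChange { type: "normal"; ln1: number; ln2: number; normal: true; content: string; }
interface AddChange { type: "add"; add: true; ln: number; content: string; }
interface DeleteChange { type: "del"; del: true; ln: number; content: string; }
type Change = NormalChange | AddChange | DeleteChange;

// Hypothetical helper: collects the line numbers of added or deleted lines.
function collectChangedLines(changes: Change[]): Set<number> {
  const changedLines = new Set<number>();
  for (const change of changes) {
    // Comparing against the literal types narrows `change` to
    // AddChange | DeleteChange, both of which have an `ln` property.
    if (change.type === "add" || change.type === "del") {
      changedLines.add(change.ln);
    }
  }
  return changedLines;
}

const changedLines = collectChangedLines([
  { type: "add", add: true, ln: 3, content: "+foo" },
  { type: "normal", normal: true, ln1: 4, ln2: 4, content: " bar" },
  { type: "del", del: true, ln: 7, content: "-baz" },
]);
console.log(Array.from(changedLines)); // [ 3, 7 ]
```

The trade-off is that the types of interest are spelled out inline rather than living in a Set; Set.has returns a plain boolean, so the compiler can’t use it to narrow, which is why the Set-based version needs the casts.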

Now, not all dependencies directly export the types that we need. For example, since this program was running as a GitHub Action, it wants to interact with the object that GitHub provides for pull requests. Using our very handy “Go to Type Definition” functionality, we can peer into the node_modules folder for GitHub’s code:

// in node_modules\@actions\github\lib\interfaces.d.ts

export interface WebhookPayload {

[key: string]: any;

repository?: PayloadRepository;

issue?: {

[key: string]: any;

number: number;

html_url?: string;

body?: string;

};

pull_request?: {

[key: string]: any;

number: number;

html_url?: string;

body?: string;

};

sender?: {

[key: string]: any;

type: string;

};

action?: string;

installation?: {

id: number;

[key: string]: any;

};

comment?: {

id: number;

[key: string]: any;

};

}

In this case, we’d like to interact with the pull_request entity that’s part of this WebhookPayload interface, but there’s no exported type signature for it. Are we doomed? No! Here’s how easy TypeScript makes it to re-create a type when the signature for it isn’t explicitly defined or exported:

import { context } from "@actions/github";

export type GithubPullRequest = typeof context.payload.pull_request | undefined;

The typeof keyword creates a type from what’s been shown above. We partner that with | undefined to spell out what the question mark in pull_request? means: that the property can be undefined. (With strictNullChecks enabled, the type of an optional property already includes undefined, so the explicit union mostly documents intent.) As in the parse-diff example above, that forces any caller interacting with pullRequest to explicitly perform null checks:

import { GithubPullRequest } from "./theFileItsDefinedIn";

function someFunction(pullRequest: GithubPullRequest) {

const commit_id = pullRequest.head.sha;

// ^^^^^^^^^^^ compile time error

}

The compiler immediately informs us:

'pullRequest' is possibly 'undefined'.

And that error won’t go away till we’ve fully safeguarded the call:

function someFunction(pullRequest: GithubPullRequest) {

const commit_id = pullRequest?.head?.sha;

}

Strong types make some errors impossible, and that’s why I love them.
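Optional chaining satisfies the compiler, but it also means commit_id itself can end up undefined further down the line. Another option is a guard clause that fails fast. The sketch below uses a pared-down local stand-in for the payload type (the real one lives in @actions/github), and getHeadSha is a hypothetical helper name:

```typescript
// Pared-down stand-in for the WebhookPayload excerpt above (an assumption made
// so the snippet is self-contained; the real type lives in @actions/github).
interface WebhookPayloadLike {
  pull_request?: {
    [key: string]: any;
    number: number;
  };
}

// An indexed access type pulls out the property's type, `undefined` included.
type PullRequestLike = WebhookPayloadLike["pull_request"];

// Hypothetical helper: returns the head commit SHA or throws if it's missing.
function getHeadSha(pullRequest: PullRequestLike): string {
  if (!pullRequest) {
    throw new Error("This action only supports pull_request events");
  }
  // Past the guard, `pullRequest` is narrowed to the object type.
  // `head` comes from the index signature, so it is `any` and needs a check.
  const sha: unknown = pullRequest.head?.sha;
  if (typeof sha !== "string") {
    throw new Error("Pull request payload has no head SHA");
  }
  return sha;
}

console.log(getHeadSha({ number: 1, head: { sha: "abc123" } })); // abc123
```

Because everything reached through the [key: string]: any index signature is untyped, the typeof check on sha is doing work that the compiler can’t do for us here.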

Working With SFDX In TypeScript

While it’s always possible to delegate to the command line when it comes to executing SFDX commands, scripting as a whole tends to be more finicky than programming. Even for the Bash and PowerShell pros among us, though, there’s another reason one might be tempted to at least want to interact with SFDX as just another JavaScript module: bundling. I’d highly recommend reading that article if you aren’t familiar with the term, but the TL;DR is this: bundling is a process that sits between your code compiling and it being called externally. A bundler goes through all of your code and copies and pastes all of the dependencies you rely on to be part of that code. It then goes through all of your dependencies’ dependencies and does the same thing (for any function you’re using; a good bundler tries very hard to only import relevant code), and so on and so on until it’s built up only the code necessary for your project to run. Particularly for GitHub Actions, where your repository must include all of the dependent code you rely on, bundling can make an enormous difference when it comes to:

- local development speed (there’s nothing like having 5 million lines of node_modules code checked into Git to slow down your IDE and git process!)

- external usage speed. Did you know there are significant climate impacts related to things like reducing how many packets are required to transmit data online? A little goes a long way, in other words

Importing SFDX As A Dependency

When it comes to working with SFDX, it should be possible to interact with it as you would any other dependency:

import sfdx from "sfdx-cli";

// and in your code, maybe something like:

const deployResult = await sfdx.force.source.deploy("some/path.cls");

if (deployResult.status !== 0) {

// handle the error accordingly

}That’s a … purely hypothetical look at what interacting with SFDX as a JavaScript/TypeScript object might look like.

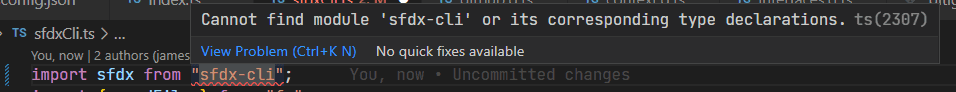

But here’s what happens in TypeScript when we try that:

Uh oh! OK, so there’s no default export from SFDX. That’s no big deal. Let’s do a little spelunking in node_modules/sfdx-cli:

│

└───bin

│ │ dev.sh

│ │ run

│ │ run.cmd

│ │ run.sh

│

└───dist

│

└───scripts

│

If you aren’t familiar with the way these things generally work, bin is where it’s common to find executables for running bundled code (SFDX is also written in TypeScript); dist is where the transpiled (TypeScript -> JavaScript conversion) code is typically stored. Peeking into the run file with no file extension yields our search’s first bingo:

const cli = require("../dist/cli");

const pjson = require("../package.json");

// ... some other stuff

const version = pjson.version;

const channel = "stable";

cli

.create(version, channel)

.run()

.then(function (result) {

modules.dump();

require("@oclif/command/flush")(result);

})

.catch(function (err) {

modules.dump();

require("@oclif/errors/handle")(err);

});

There are two really promising pieces of this puzzle that have just come together:

- We can adopt this code nearly as is

- Since the run() function returns a Promise, and the then() callback seems to expect there to be a result, we may be able to gain programmatic access to the output of any given SFDX command 🥳

Let’s move this into a new TypeScript file, sfdxCli.ts:

import * as cli from "sfdx-cli/dist/cli";

export const sfdx = (() => {

// we want to take the current version used by OUR package.json

// and the "create" function throws if we are using NPM-supported

// characters like "^" in our version number

const currentCliVersion: string = require("../package.json").dependencies[

"sfdx-cli"

].replace(/>(|=)|~|\^/, "");

return cli

.create(currentCliVersion, "stable")

.run()

.catch((_: Error) => {});

})();

The import statement at the top still complains until you also add a type declaration file for SFDX:

// in sfdxCli.d.ts

declare module "sfdx-cli/dist/cli";

If we were to transpile this code as is by running tsc, a new file would be generated at lib/sfdxCli.js (or whatever outDir is specified in your tsconfig.json file). If I run node lib/sfdxCli.js scanner:run, I’m greeted with the familiar output I’d get if I had run sfdx scanner:run from my command line:

ERROR running scanner:run: Missing required flag:

-t, --target TARGET source code location

See more help with --help

So, that’s really great. But it brings up something that’s … kind of missing from the example we saw in the run file: how are command line arguments interpreted by the CLI? Answering that question led me down a long rabbit hole, which I’ll mostly spare you from. The “answer”, such as it is, comes from the create function that we’re importing from sfdx-cli/dist/cli:

const config_1 = require("@oclif/config");

const path = require("path");

function create(version, channel, run, env = env_1.default) {

const root = path.resolve(__dirname, "..");

const pjson = require(path.resolve(__dirname, "..", "package.json"));

const args = process.argv.slice(2);

// ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ - BINGO

return {

async run() {

const config = new config_1.Config({

name: (0, ts_types_1.get)(pjson, "oclif.bin"),

root,

version,

channel,

});

await config.load();

// some other stuff that's not really important

return run ? run(args, config) : await SfdxMain.run(args, config);

},

};

}

exports.create = create;

Turns out (and this is not at all uncommon for CLI applications written in Node) that process.argv (a list of strings) is used. If I make a simple Node file and run it to log the output of that list, here’s what I see:

[

'C:\\Program Files\\nodejs\\node.exe',

'~\\Documents\\Code\\repo\\test.js'

]

So without passing anything at all, we can see why .slice(2) is being used: the first two entries in the list are always the location of node, and the path to the file you’re running. Which leaves us with something like this:

const cli = async <T>(cliArgs: string[]) => {

const currentCliVersion: string = require("../package.json").dependencies[

"sfdx-cli"

].replace(/>(|=)|~|\^/, "");

// create a copy of what the current argv's are to reset to later

// the CLI parses everything after the 2nd argument as "stuff that gets passed to SFDX"

const originalArgv = [...process.argv];

process.argv = ["node", "unused", ...cliArgs];

const result = (await sfdxCli.create(currentCliVersion, "stable").run()) as T;

process.argv = originalArgv;

return result;

};

Note that at present I’ve made this a generic function; I don’t know if every SFDX command will return the same thing. I’ve also removed the IIFE since I don’t need to invoke this from the command line; I need it to work when called from within the GitHub Action as a regular function would.
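One caveat with swapping process.argv like this: if run() rejects, the line that resets process.argv never executes, and the rest of the process keeps seeing the substituted arguments. A try/finally guards against that. Here’s a minimal sketch of the pattern on its own; withArgv is a hypothetical helper, not something sfdx-cli provides:

```typescript
// Hypothetical helper: temporarily replaces process.argv while `fn` runs,
// then restores the original value even if `fn` throws or rejects.
async function withArgv<T>(cliArgs: string[], fn: () => Promise<T>): Promise<T> {
  const originalArgv = [...process.argv];
  // the first two entries stand in for the node binary and the script path
  process.argv = ["node", "unused", ...cliArgs];
  try {
    return await fn();
  } finally {
    process.argv = originalArgv;
  }
}

// Usage: the callback sees only the arguments we passed in.
withArgv(["scanner:run", "--json"], async () => process.argv.slice(2)).then(
  (seen) => console.log(seen) // [ 'scanner:run', '--json' ]
);
```

Since process.argv is global state, overlapping calls would clobber each other; this only holds up when invocations run one at a time.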

Since at the moment I only need to run the scanner:run command, there’s a bit more encapsulation that we can do (to hide the ugliness of passing a list of strings around):

export type ScannerFinding = {

fileName: string;

engine: string;

violations: ScannerViolation[];

};

export type ScannerViolation = {

category: string;

column: string;

endColumn: string;

endLine: string;

line: string;

message: string;

ruleName: string;

severity: number;

url?: string;

};

// the cli method, same as before, then:

export async function scanFiles(scannerCliArgs: string[]): Promise<ScannerFinding[]> {

const resultJson = await cli<string>([

"scanner:run",

"--json",

...scannerCliArgs,

]);

return JSON.parse(resultJson);

}

Still not totally ideal since scanFiles doesn’t necessarily know how many arguments are going to be passed to it. Since this is a GitHub Action we’re talking about (and since some of the arguments are optional), we can once again use the power of TypeScript to express how we’d like for these parameters to be passed:

export type ScannerFlags = {

category?: string;

engine?: string;

env?: string;

eslintconfig?: string;

pmdconfig?: string;

// note that target is NOT optional!

target: string;

tsConfig?: string;

};

// ...

export async function scanFiles(scannerFlags: ScannerFlags): Promise<ScannerFinding[]> {

const scannerCliArgs = (

Object.keys(scannerFlags) as Array<keyof ScannerFlags>

).map(

(key) => `${scannerFlags[key] ? `--${key}="${scannerFlags[key]}"` : ""}`

);

const resultJson = await cli<string>([

"scanner:run",

...scannerCliArgs,

"--json",

]);

return JSON.parse(resultJson);

}

This is really nice, and now our test surface is really small too. Let’s move on to unit testing!
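One wrinkle in the mapping above: any flag that’s unset (or an empty string) still contributes an empty string to the argument list. Here’s a minimal sketch of one way around that, emitting each flag and its value as separate entries and skipping the empty ones. toCliArgs is a hypothetical helper, and the type is a trimmed copy of ScannerFlags so that the snippet stands alone:

```typescript
// Trimmed copy of the ScannerFlags type above so this snippet stands alone.
type ScannerFlags = {
  category?: string;
  engine?: string;
  env?: string;
  target: string;
};

// Hypothetical helper: turns a flags object into ["--flag", "value", ...],
// skipping flags that are undefined or empty strings.
function toCliArgs(flags: ScannerFlags): string[] {
  const args: string[] = [];
  for (const key of Object.keys(flags) as Array<keyof ScannerFlags>) {
    const value = flags[key];
    if (value) {
      args.push(`--${key}`, value);
    }
  }
  return args;
}

console.log(toCliArgs({ engine: "pmd", env: "", target: "force-app" }));
// [ '--engine', 'pmd', '--target', 'force-app' ]
```

Keeping this as a small pure function also means it can be unit tested without invoking the CLI at all.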

Unit Testing In TypeScript

Luckily, Jest is extremely well-supported within the TypeScript community, which means if you’ve done any Advanced LWC Jest Testing or even read articles like LWC Modal Cleanup & Testing, you’re probably already familiar with using Jest to test your Lightning Web Components. There are only a few subtle differences when starting down this path, as is evidenced by some import statements at the top of our test file sfdxCli.test.ts. I’ve also gone ahead and created an example Apex class that should have at least a few violations associated with it:

@IsTest

private class ExampleClass {

@IsTest

static void itHasNoAsserts() {

// should throw a scanner violation!

}

}

And then the start of our Jest tests:

import { expect, it, describe } from "@jest/globals";

import path from "path";

import { scanFiles } from "../src/sfdxCli";

describe("CLI tests!", () => {

it("reports violations successfully", async () => {

const scannerFlags = {

engine: "pmd",

target: path.join(process.cwd(), "__tests__/ExampleClass.cls"),

};

const findings = await scanFiles(scannerFlags);

expect(findings).toBeTruthy();

const scannerViolations = findings[0].violations;

expect(scannerViolations.length).toBe(2);

expect(

scannerViolations.find(

(violation) =>

violation.ruleName === "ApexUnitTestClassShouldHaveAsserts"

)

).toBeTruthy();

expect(

scannerViolations.find(

(violation) => violation.ruleName === "EmptyStatementBlock"

)

).toBeTruthy();

});

});

Initially, the test hung and timed out after the default Jest timeout of 5 seconds had been reached. You can imperatively tell Jest to time out after a longer time period, and my initial thought was that invoking the scanner and getting the result back was simply taking longer than that:

/**

* Totally goofy hack that allows the CLI to return data to us

* during tests - in local runs, this was taking about 9 seconds

* to complete scans, using 20 seconds for now as a safe buffer

*/

jest.useFakeTimers();

jest.setTimeout(20000);

describe("CLI tests!", () => {

// ...

});

But no matter what duration was applied to the setTimeout call, the test would simply hang. I could see SFDX messages successfully appearing in my console, so I knew the CLI was being invoked properly, but clearly there was some kind of holdup with one of SFDX’s dependencies. I finally (after an hour or so of painful debugging) isolated the issue to one of the last commands run by the CLI, which was referencing one of the base @oclif dependencies (at least, for the version of the CLI that the current version of the GitHub Action was pinned to). As with mocking in LWC tests, mocking in basic Jest is simple:

// this is the dependency that was hanging on an

// unresolved Promise in my tests - note how

// I tell the "flush" call below to immediately resolve

jest.mock("@oclif/errors/lib/logger", () => {

return {

Logger: class Logger {

log = jest.fn();

flush = jest.fn().mockResolvedValue("");

},

};

});

And now the test started to run. Here, I once again encountered an unexpected error due to the older version of SFDX that was being used. If you recall from the SFDX excerpt I showed when initially demoing how the command line arguments are parsed by the CLI, at the top level of the application the CLI is properly returning whatever happens. However, in this specific version of SFDX, the very next function called looks like this:

async runCommand(id: string, argv: string[] = []) {

debug('runCommand %s %o', id, argv)

const c = this.findCommand(id)

if (!c) {

await this.runHook('command_not_found', {id})

throw new CLIError(`command ${id} not found`)

}

const command = c.load()

await this.runHook('prerun', {Command: command, argv})

const result = await command.run(argv, this)

// ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

await this.runHook('postrun', {Command: command, result: result, argv})

}

And, alas, what that const result line means is that if a particular SFDX command does not specifically implement a postrun hook that handles the result, it’s abandoned right before passing the data back to the consumer. Newer versions of SFDX instead use a (very similar but updated) version of this function that does return the result:

async runCommand<T = unknown>(id: string, argv: string[] = [], cachedCommand?: Command.Loadable): Promise<T> {

debug('runCommand %s %o', id, argv)

const c = cachedCommand || this.findCommand(id)

// ... a lot more stuff, this function has really grown in size!

// ... and then finally 👇

const command = await c.load()

await this.runHook('prerun', {Command: command, argv})

const result = (await command.run(argv, this)) as T

// ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

await this.runHook('postrun', {Command: command, result: result, argv})

return result

// ^^^ 👆

}

Now, does this mean that users of older versions of the CLI are doomed? Once again, in our specific situation, the answer is no, because the scanner:run command supports writing to the file system. Our implementation ends up looking like this:

// in sfdxCli.ts

import * as sfdxCli from "sfdx-cli/dist/cli";

import { readFile } from "fs";

export const FINDINGS_OUTPUT = "sfdx-scanner-findings.json";

type InternalScannerFlags = ScannerFlags & {

outfile: string;

};

// all the other type signatures, and then ...

const cli = async <T>(cliArgs: string[]) => {

const currentCliVersion: string = require("../package.json").dependencies[

"sfdx-cli"

].replace(/>(|=)|~|\^/, "");

// create a copy of what the current argv's are to reset to later

// the CLI parses everything after the 2nd argument as "stuff that gets passed to SFDX"

const originalArgv = [...process.argv];

process.argv = ["node", "unused", ...cliArgs, "--json"];

// this is currently a void method, which is a bit unfortunate as the "run" function

// retains the response returned by any sub-command until the penultimate moment

// which locks us into using the FINDINGS_OUTPUT file to get around this restriction.

// long-term, I hope to be able to convince the SFDX team to propagate command responses directly

// which would allow them to be awaitable and parseable right here

const result = (await sfdxCli.create(currentCliVersion, "stable").run()) as T;

process.argv = originalArgv;

return result;

};

export async function scanFiles(

scannerFlags: ScannerFlags

): Promise<ScannerFinding[]> {

const internalScannerFlags = {

outfile: FINDINGS_OUTPUT,

...scannerFlags,

};

const scannerCliArgs = (

Object.keys(internalScannerFlags) as Array<keyof InternalScannerFlags>

)

.map<string[]>((key) =>

internalScannerFlags[key]

? ([`--${key}`, internalScannerFlags[key]] as string[])

: []

)

.reduce((acc, [one, two]) => (one && two ? [...acc, one, two] : acc), []);

await cli(["scanner:run", ...scannerCliArgs]);

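return new Promise((resolve, reject) => {

readFile(FINDINGS_OUTPUT, (err, data) => {

// surface read failures instead of throwing inside the callback

if (err) {

reject(err);

return;

}

resolve(JSON.parse(data.toString()));

});

});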

}

Our full Jest test class ends up looking like this:

import { afterEach, expect, it, describe } from "@jest/globals";

import fs from "fs";

import path from "path";

import { FINDINGS_OUTPUT, scanFiles } from "../src/sfdxCli";

// otherwise some of the underlying methods that SFDX relies on

// try to resolve while jest is tearing down each test

jest.useFakeTimers();

jest.setTimeout(20000);

jest.mock("@oclif/errors/lib/logger", () => {

return {

Logger: class Logger {

log = jest.fn();

flush = jest.fn().mockResolvedValue("");

},

};

});

describe("CLI tests!", () => {

afterEach(() => {

try {

fs.unlinkSync(FINDINGS_OUTPUT);

} catch (_) {

// no-op

}

});

it("reports violations successfully", async () => {

const scannerFlags = {

engine: "pmd",

target: path.join(process.cwd(), "__tests__/ExampleClass.cls"),

};

const findings = await scanFiles(scannerFlags);

expect(fs.statSync(FINDINGS_OUTPUT).isFile()).toBeTruthy();

expect(findings).toBeTruthy();

const scannerViolations = findings[0].violations;

expect(scannerViolations.length).toBe(2);

expect(

scannerViolations.find(

(violation) =>

violation.ruleName === "ApexUnitTestClassShouldHaveAsserts"

)

).toBeTruthy();

expect(

scannerViolations.find(

(violation) => violation.ruleName === "EmptyStatementBlock"

)

).toBeTruthy();

});

});

And now the test passes without issue. Again, since this specific command allows us to interact with the file system, things ended up being OK. But … it’s pretty ugly.
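If you do end up stuck with the file-based approach, the callback-style readFile can be swapped for the promise-based version in fs/promises, which lets a read failure surface as a rejected promise. Here’s a minimal sketch, assuming a Node version that ships fs/promises (14 or later). readFindings is a hypothetical helper, the demo file name is made up, and the ScannerFinding type is abbreviated:

```typescript
import { readFile, writeFile, unlink } from "fs/promises";
import { tmpdir } from "os";
import { join } from "path";

// Abbreviated stand-in for the ScannerFinding type defined earlier.
type ScannerFinding = { fileName: string; engine: string; violations: unknown[] };

// Hypothetical helper: reads and parses the scanner's output file.
// A missing or unreadable file rejects the returned promise.
async function readFindings(outfile: string): Promise<ScannerFinding[]> {
  const contents = await readFile(outfile, "utf8");
  return JSON.parse(contents) as ScannerFinding[];
}

// Usage with a throwaway file standing in for the scanner's real output.
async function demo(): Promise<number> {
  const outfile = join(tmpdir(), "sfdx-scanner-findings-demo.json");
  const fakeFindings = [{ fileName: "ExampleClass.cls", engine: "pmd", violations: [] }];
  await writeFile(outfile, JSON.stringify(fakeFindings));
  try {
    const findings = await readFindings(outfile);
    return findings.length;
  } finally {
    // clean up the throwaway file whether or not parsing succeeded
    await unlink(outfile);
  }
}

demo().then((count) => console.log(count)); // 1
```

The same try/finally shape could replace the afterEach cleanup in the test, though keeping cleanup in afterEach has the advantage of running even when an assertion fails.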

It would be really nice to not have to use the file system as an intermediary, so I filed an issue on GitHub with the oclif/config repo which started a conversation that led me to updating the pinned sfdx-cli dependency for this GitHub action. But (as of the time of this writing) even the latest version of @oclif doesn’t fully propagate the result of SFDX commands all the way up the call stack used by SFDX — so I filed an issue on GitHub with the oclif/core repo and got to work fixing it.

But let’s pretend, for a moment, that this issue was already fixed — and that we could use the up-to-date version of SFDX with this updated dependency.

Update: since originally publishing the article this morning, my contribution was merged in — now by explicitly pinning to the updated @oclif dependency, the below “just works”:

// in package.json

// this is the new version that was generated this morning

"overrides": {

"@oclif/core": "^1.24.2"

}

Let’s see what the updated version of the code (and tests!) would look like:

// in sfdxCli.ts

import * as sfdxCli from "sfdx-cli/dist/cli";

export type ScannerFinding = {

fileName: string;

engine: string;

violations: ScannerViolation[];

};

export type ScannerFlags = {

category?: string;

engine?: string;

env?: string;

eslintconfig?: string;

pmdconfig?: string;

target: string;

tsConfig?: string;

};

export type ScannerViolation = {

category: string;

column: string;

endColumn: string;

endLine: string;

line: string;

message: string;

ruleName: string;

severity: number;

url?: string;

};

const cli = async <T>(cliArgs: string[]) => {

const currentCliVersion: string = require("../package.json").dependencies[

"sfdx-cli"

].replace(/>(|=)|~|\^/, "");

// create a copy of what the current argv's are to reset to later

// the CLI parses everything after the 2nd argument as "stuff that gets passed to SFDX"

const originalArgv = [...process.argv];

process.argv = ["node", "unused", ...cliArgs];

const result = (await sfdxCli.create(currentCliVersion, "stable").run()) as T;

process.argv = originalArgv;

// you could do something fancier here, but it also "just works" as is

return result;

};

export async function scanFiles(

scannerFlags: ScannerFlags

): Promise<ScannerFinding[]> {

// the "as Array<keyof ScannerFlags>"

// part below is necessary because Object.keys is

// not generic in TypeScript, for whatever reason -

// but that bit allows our callback function for .map

// to be strongly typed

const scannerCliArgs = (

Object.keys(scannerFlags) as Array<keyof ScannerFlags>

)

// the "as string[]" section here tells TypeScript that, due to the ternary

// we know for SURE that there is an entry for every item in the inner list

// whereas by default the type would be "(string | undefined)[]" - in other words

// a list that either has strings or undefined entries

.map<string[]>((key) =>

scannerFlags[key] ? ([`--${key}`, scannerFlags[key]] as string[]) : []

)

// lastly, we flatten the list of lists

.reduce((acc, [one, two]) => (one && two ? [...acc, one, two] : acc), []);

return cli<ScannerFinding[]>(["scanner:run", ...scannerCliArgs, "--json"]);

}

And then the test becomes much simpler:

import { expect, it, describe } from "@jest/globals";

import path from "path";

import { scanFiles } from "../src/sfdxCli";

// otherwise some of the underlying methods that SFDX relies on

// try to resolve while jest is tearing down each test

jest.useFakeTimers();

jest.setTimeout(20000);

describe("CLI tests!", () => {

it("reports violations successfully", async () => {

const scannerFlags = {

engine: "pmd",

env: "", // ensure invalid flags aren't passed

target: path.join(process.cwd(), "__tests__/ExampleClass.cls"),

};

const findings = await scanFiles(scannerFlags);

expect(findings).toBeTruthy();

const scannerViolations = findings[0].violations;

expect(scannerViolations.length).toBe(2);

expect(

scannerViolations.find(

(violation) =>

violation.ruleName === "ApexUnitTestClassShouldHaveAsserts"

)

).toBeTruthy();

expect(

scannerViolations.find(

(violation) => violation.ruleName === "EmptyStatementBlock"

)

).toBeTruthy();

});

});

This is really nice. It’s simple, it’s effective, and it doesn’t have to jump through file system hoops (adding and deleting files) in order to test our usage of SFDX. There is the matter of the --json flag being appended to the scan command: in my own testing, I found that the nature of the data that was returned differed (at least for the scanner:run command) based on whether or not that flag was present. If it was not present, for example, only the ScannerViolation[] list was returned by the CLI, instead of the outer list for the ScannerFinding type (which includes additional helpful properties, like the engine where the scanner violations occurred, as well as the file name)!

I don’t know if every command within SFDX behaves like that, but it certainly seems like for the scanner:run command defaulting to including --json isn’t just nice — it’s necessary to get all of the information that we need. I do know that --json is not a valid flag for all SFDX commands, so it can’t be blindly passed to all cli invocations. It’s a balancing act!
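Since the shape of the result depends on whether --json was passed, it can be worth checking what actually came back rather than relying solely on the as T cast. Below is a minimal sketch of a type guard for that. isFindingList is a hypothetical helper, the types are abbreviated copies of the ones above, and the two shapes it distinguishes are only the ones described here for scanner:run:

```typescript
// Abbreviated copies of the types above so this snippet stands alone.
type ScannerViolation = { ruleName: string; message: string; severity: number };
type ScannerFinding = { fileName: string; engine: string; violations: ScannerViolation[] };

// Hypothetical type guard: true when every entry looks like a ScannerFinding
// (has a fileName and a violations array) rather than a bare violation.
// Note that an empty array passes, since there is nothing to contradict it.
function isFindingList(result: unknown): result is ScannerFinding[] {
  return (
    Array.isArray(result) &&
    result.every(
      (entry) =>
        typeof entry === "object" &&
        entry !== null &&
        typeof (entry as ScannerFinding).fileName === "string" &&
        Array.isArray((entry as ScannerFinding).violations)
    )
  );
}

const withJson: unknown = [{ fileName: "ExampleClass.cls", engine: "pmd", violations: [] }];
const withoutJson: unknown = [{ ruleName: "EmptyStatementBlock", message: "...", severity: 3 }];

console.log(isFindingList(withJson)); // true
console.log(isFindingList(withoutJson)); // false
```

A caller could throw (or fall back to wrapping the bare violations) when the guard fails, which turns a silent shape mismatch into an explicit error.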

Wrapping Up

I’m grateful that my proposed change to oclif was accepted quickly. Even for older dependency versions, we have a viable workaround for any SFDX command which can write its output to a file (as scanner:run does with its --outfile flag), which is great. I hope it was interesting to learn more about how the CLI can be interacted with when working within TypeScript. On the subject of bundling: the initial implementation of this cutover to TypeScript retained the assumption that @salesforce/sfdx-scanner was going to be installed before using the action. I later ended up adding the scanner as part of what gets bundled, but I wanted to return to how bundling can really help:

- old implementation, all node_modules part of the action: > 300 MB of dependencies downloaded per action run

- original TypeScript implementation, with just SFDX bundled: ~18 MB of dependencies downloaded per action run

- updated TypeScript implementation, with SFDX and the scanner bundled: ~80 MB of dependencies downloaded per action run

Even with all of eslint and things like PMD included, in other words, there’s a massive amount of savings to be had by making use of bundling!

Due to its incredible tooling support and exceptionally expressive type system, I’d be hard-pressed to reach for a better language than TypeScript when the alternative is vanilla JS. Perhaps this post will inspire some of you to create strongly-typed GitHub Actions of your own; that would make me very happy. This (open source DevOps) is a still-nascent part of the Salesforce ecosystem, and it’s an area ripe with opportunity.

Thanks, as always, to Henry Vu for his support of me on Patreon. Till next time!